How to block search engines from indexing your web server

07 Apr 2015

Here's a neat little trick to stop search engines from indexing anything served by your web server.

Say you have a web server hosting many websites that serve as testing and development sites for your clients. It's happened to the best of us: Google has gone and indexed one of those sites, either because you forgot to add password protection or because you accidentally dropped in the LIVE robots.txt file.

As described here, Google respects the X-Robots-Tag HTTP response header.

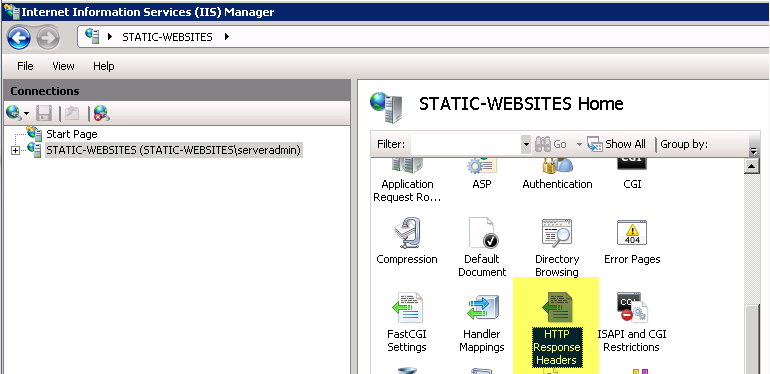

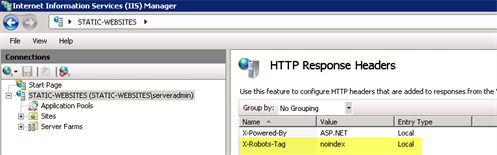

Add the X-Robots-Tag header at the IIS server level so that a noindex directive is sent with every response your web server returns:

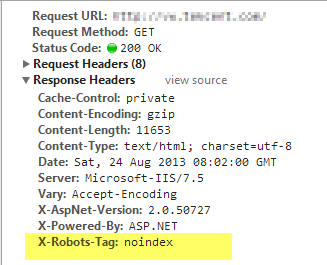
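
For reference, here is a minimal sketch of the same setting in XML form, assuming the header is added as an IIS custom response header. At server level IIS stores this in applicationHost.config; the same element should also work in a single site's web.config if you only want to cover one site.

```xml
<configuration>
  <system.webServer>
    <httpProtocol>
      <customHeaders>
        <!-- Sent with every response; tells crawlers not to index the page -->
        <add name="X-Robots-Tag" value="noindex" />
      </customHeaders>
    </httpProtocol>
  </system.webServer>
</configuration>
```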

Now inspect the response headers to confirm that they include the noindex directive.
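
If you would rather check this from a script than from the browser's developer tools, something like the following Python sketch could do it. The function names `fetch_headers` and `has_noindex` are made up for this illustration, and it uses only the standard library.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def fetch_headers(url):
    """Send a HEAD request and return the response headers as (name, value) pairs."""
    request = Request(url, method="HEAD")
    try:
        with urlopen(request) as response:
            return list(response.headers.items())
    except HTTPError as error:
        # Error responses (for example a 401 from a password-protected site)
        # still carry headers, so they are worth checking too.
        return list(error.headers.items())


def has_noindex(headers):
    """Return True if any X-Robots-Tag header carries a noindex directive."""
    for name, value in headers:
        if name.lower() != "x-robots-tag":
            continue
        # A value may list several comma-separated directives, optionally
        # prefixed with a user agent, e.g. "googlebot: noindex, nofollow".
        for directive in value.split(","):
            if directive.split(":")[-1].strip().lower() == "noindex":
                return True
    return False
```

Calling `has_noindex(fetch_headers("http://dev.example.com/"))` should return `True` once the header is in place, where the URL is a placeholder for one of your own development sites.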